SVRnet+

Pose Estimation on a Riemannian Manifold

Project Details

- Publication : MICCAI 2018

- Date Published : 26th September 2018

- DOI : 10.1007/978-3-030-00928-1_85

- Code : GitHub

Computing CNN Loss and Gradients for Pose Estimation with Riemannian Geometry

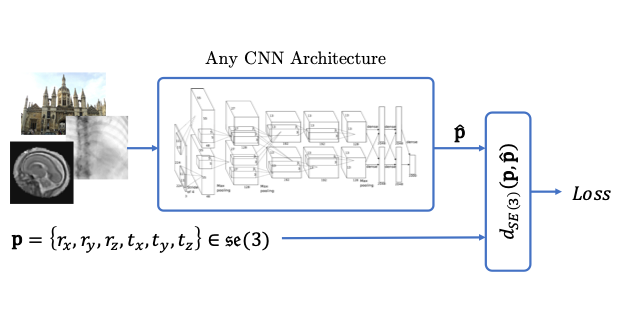

Pose estimation, i.e. predicting a 3D rigid transformation in SE(3) with respect to a fixed co-ordinate frame, is an omnipresent problem in medical image analysis. Deep learning methods often parameterise poses with a representation that separates rotation and translation. As commonly available frameworks do not provide means to calculate loss on a manifold, regression is usually performed using the L2-norm independently on the parameterisations of the rotation and the translation. This is a metric for linear spaces and does not take into account the Lie group structure of SE(3).

In this paper, we propose a general Riemannian formulation of the pose estimation problem, and train CNNs directly on SE(3) equipped with a left-invariant Riemannian metric. The loss between the ground truth and predicted pose (elements of the manifold) is calculated as the Riemannian geodesic distance, which couples together the translation and rotation components. Network weights are updated by back-propagating the gradient with respect to the predicted pose on the tangent space of the manifold SE(3). We thoroughly evaluate the effectiveness of our loss function by comparing its performance with the most commonly used existing methods on tasks such as image-based localisation and intensity-based 2D/3D registration. We also show that the hyper-parameters used in our loss function to weight the contributions of rotation and translation can be calculated intrinsically from the dataset to achieve greater performance margins.
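
To make the idea of a geodesic loss on SE(3) concrete, here is a minimal NumPy sketch. It is not the paper's implementation. It assumes a left-invariant metric whose inner product at the identity is block-diagonal, with a scalar weight for the rotation part and another for the translation part. Under that assumption the squared geodesic distance between two poses is the weighted sum of the squared relative rotation angle and the squared norm of the relative translation. The names `se3_geodesic_loss`, `w_rot` and `w_trans` are hypothetical and chosen for illustration only.

```python
import numpy as np


def rotation_from_axis_angle(axis, angle):
    """Rodrigues' formula: rotation matrix for an axis and an angle in radians."""
    axis = np.asarray(axis, dtype=float)
    axis = axis / np.linalg.norm(axis)
    # Skew-symmetric cross-product matrix of the unit axis.
    K = np.array([[0.0, -axis[2], axis[1]],
                  [axis[2], 0.0, -axis[0]],
                  [-axis[1], axis[0], 0.0]])
    return np.eye(3) + np.sin(angle) * K + (1.0 - np.cos(angle)) * (K @ K)


def se3_geodesic_loss(R_pred, t_pred, R_true, t_true, w_rot=1.0, w_trans=1.0):
    """Squared geodesic distance on SE(3) under an assumed block-diagonal,
    left-invariant metric (w_rot and w_trans are illustrative weights)."""
    # Relative pose g_true^{-1} * g_pred, i.e. the prediction seen from the
    # ground-truth frame. Left-invariance means only this quantity matters.
    R_rel = R_true.T @ R_pred
    t_rel = R_true.T @ (t_pred - t_true)
    # Rotation angle of R_rel; clip guards against round-off outside [-1, 1].
    cos_theta = np.clip((np.trace(R_rel) - 1.0) / 2.0, -1.0, 1.0)
    theta = np.arccos(cos_theta)
    return w_rot * theta ** 2 + w_trans * float(t_rel @ t_rel)


if __name__ == "__main__":
    R_true, t_true = np.eye(3), np.zeros(3)
    R_pred = rotation_from_axis_angle([0.0, 0.0, 1.0], np.pi / 2)
    t_pred = np.array([3.0, 4.0, 0.0])
    print(se3_geodesic_loss(R_pred, t_pred, R_true, t_true))
```

With unit weights, the example pose differs from the ground truth by a rotation of π/2 and a translation of length 5, so the loss is (π/2)² + 25. Changing `w_rot` and `w_trans` shifts the balance between angular and positional error, which is the role the hyper-parameters play in the loss described above.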